I spend hours on Reddit tracking how real teams wrestle with their knowledge bases. Not for entertainment, but because Reddit comments reveal truths that vendor case studies never will. When someone posts “our AI chatbot hallucinates 40% of the time and we can’t turn it off,” that’s data you won’t find in a whitepaper.

I’m interested in understanding how businesses organize their know-how, how-to’s, and the many types of documentation they create. So interested, in fact, that I built a business around making it better for a certain type of company: allymatter.com.

In recent months I have wondered what people are saying about how they use knowledge bases and how AI has affected the way they store and retrieve information within their organizations. AI in knowledge bases is interesting, but the challenges teams face with their internal knowledge bases, apart from AI-induced problems and improvements, have also changed in the past few years or even months.

These are a select few comments I have collated as evidence of the frustrations and delights I’ve seen. This is not a scientific survey, nor does it recommend that you take certain actions or refrain from taking advantage of AI.

When teams report 70% ticket deflection rates, we’re discussing substantial cost savings. The question isn’t whether AI will transform documentation. It’s whether your organization will navigate it successfully or become a cautionary tale.

Use of multiple types of tools

Organizations use multiple tools internally: department-specific stores, client-specific storage tools, and specialist tools (such as ProjectWise in this example). They’ve stitched it all together with OneNote notebooks, Word files, and Excel files as well. Managing all of this can be a nightmare, and the know-how to manage it probably needs a document of its own.

Without a central knowledge base, even one used only to link to documents stored elsewhere, sharing information effectively becomes harder.

AI in knowledge bases

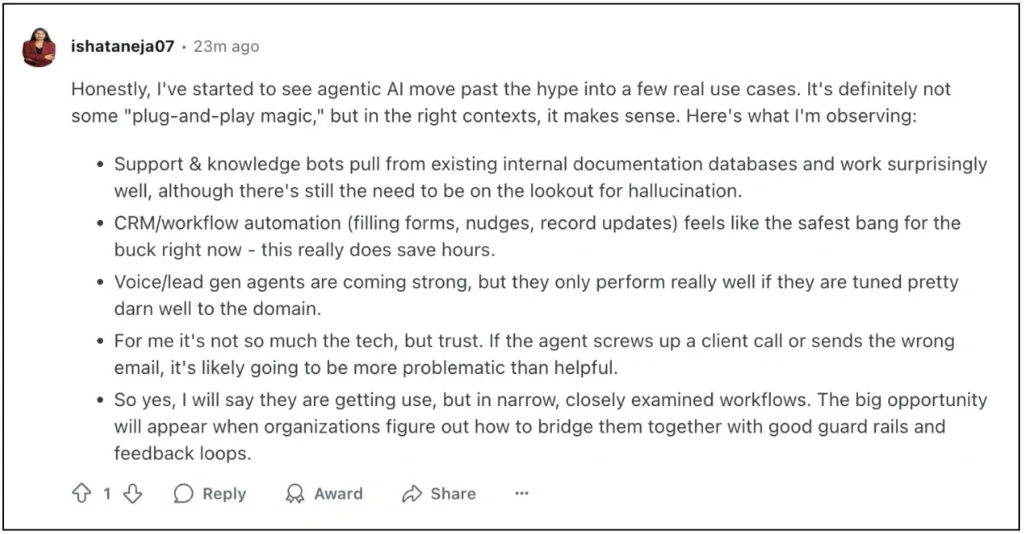

Based on dozens of Reddit threads and hundreds of comments, here’s what’s actually happening when organizations deploy AI in their documentation systems.

Lack of integration

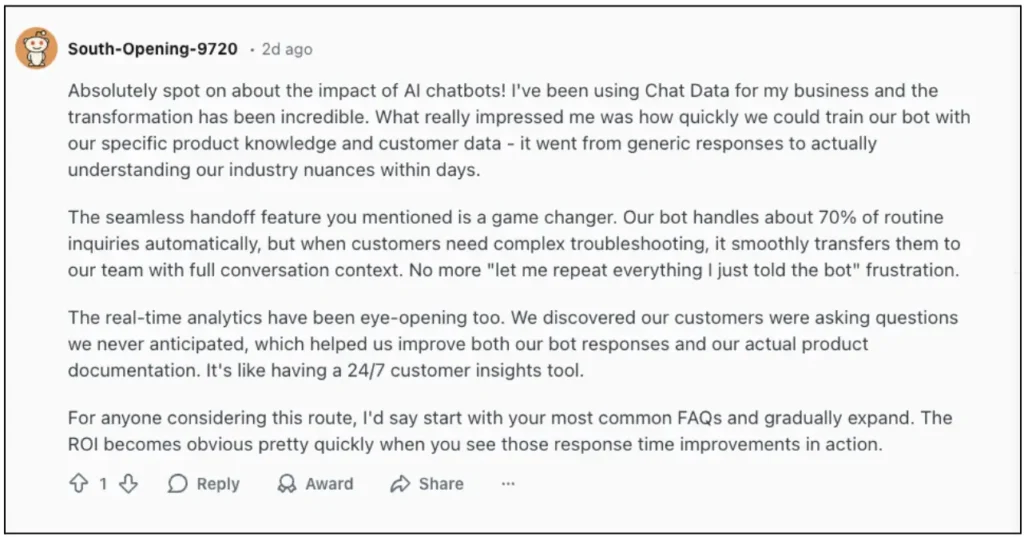

The use of AI has become widespread and in many cases a force-multiplier, an effective customer query answering machine, and a ticket-deflector for multiple organizations.

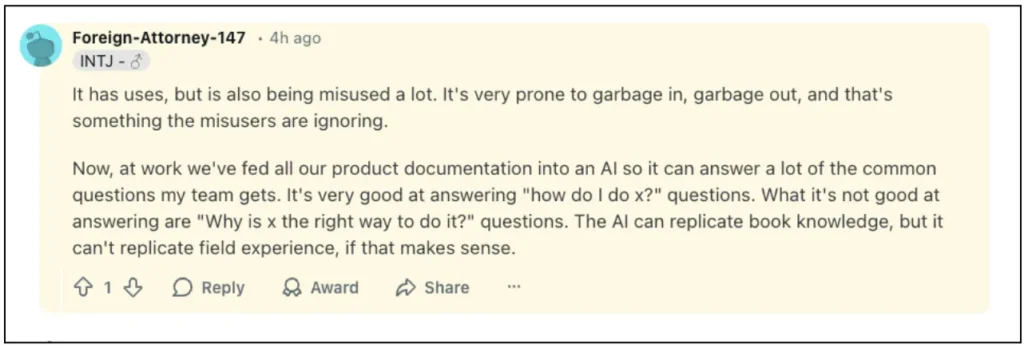

Knowledge bases in their current avatar lack integration features that bring in timely information from, or distribute it to, the other tools an organization might use. The most prevalent sentiment about AI in knowledge management concerns how it hallucinates information and how limited its ability to summarize accurately is. We see this theme across multiple comments, some of which are showcased here.

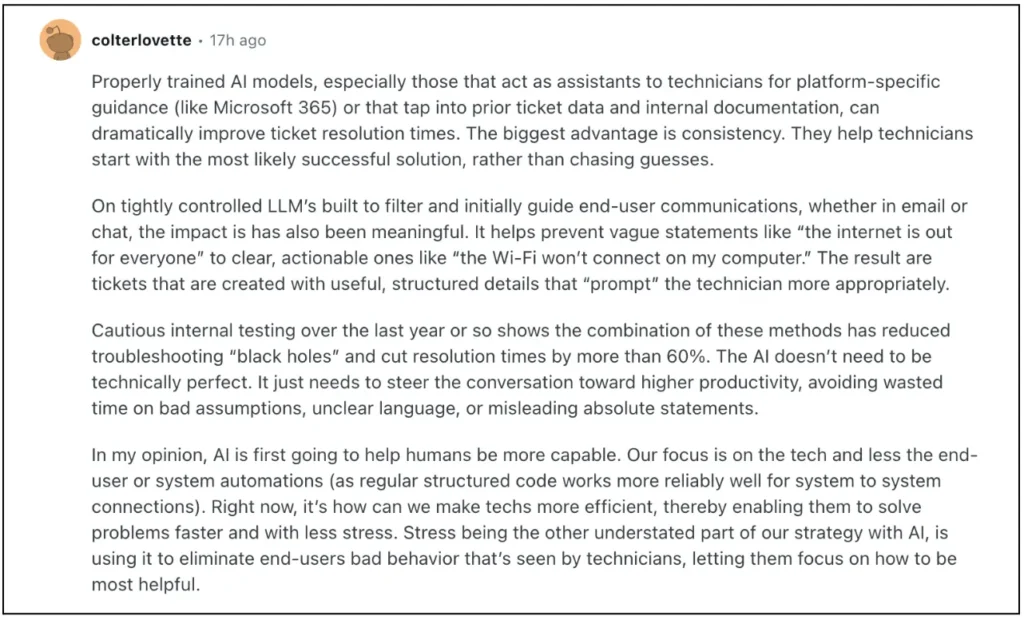

Ticket resolution and ticket deflection

AI can certainly do better with access to more information than a simple knowledge base integration provides. Given access to past tickets, it outperforms simple chatbots and can reduce or deflect helpdesk tickets without the need to talk to an expensive human technician.

But note that, even in the comment above, it is used for simpler queries and not overly complex ones, i.e. the ones post-millennial or even millennial users tend to contact the helpdesk for.

But when it works, it works well enough for some to deflect 70% of tickets. Deflecting 70% of tickets is a game changer for almost any organization. The cost savings outweigh the minor complaints people will have about talking to a lifeless bot. The added advantage of gaining insights from the queries people ask AI-driven chatbots, questions they would normally not ask a human agent, is the cherry on top.

Is 70% realistic? According to Freshworks’ 2025 CX Benchmark Report, AI agents deflect 45% of queries on average, with some industries hitting 50% or higher. The Reddit commenter’s 70% is on the high end, but not unheard of when the implementation is done right.
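To put those percentages in perspective, here is a back-of-the-envelope sketch in Python. The monthly ticket volume and the cost of a human-handled ticket are made-up illustrative inputs, not figures from the Reddit thread or the Freshworks report. Only the 45% and 70% rates come from the text above.

```python
def monthly_savings(tickets: int, deflection_rate: float, cost_per_ticket: float) -> float:
    """Estimated monthly savings from tickets that never reach a human agent."""
    if not 0.0 <= deflection_rate <= 1.0:
        raise ValueError("deflection_rate must be between 0 and 1")
    return tickets * deflection_rate * cost_per_ticket


# Hypothetical inputs, for illustration only.
TICKETS_PER_MONTH = 2_000
COST_PER_TICKET = 15.0  # assumed cost of a human-handled ticket, in dollars

for label, rate in [("benchmark average", 0.45), ("Reddit commenter", 0.70)]:
    saved = monthly_savings(TICKETS_PER_MONTH, rate, COST_PER_TICKET)
    print(f"{label}: {rate:.0%} deflected, about ${saved:,.0f} saved per month")
```

Swap in your own volume and cost per ticket. The gap between the two rates scales linearly with both, so the difference between an average and a well-done implementation grows with helpdesk size.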

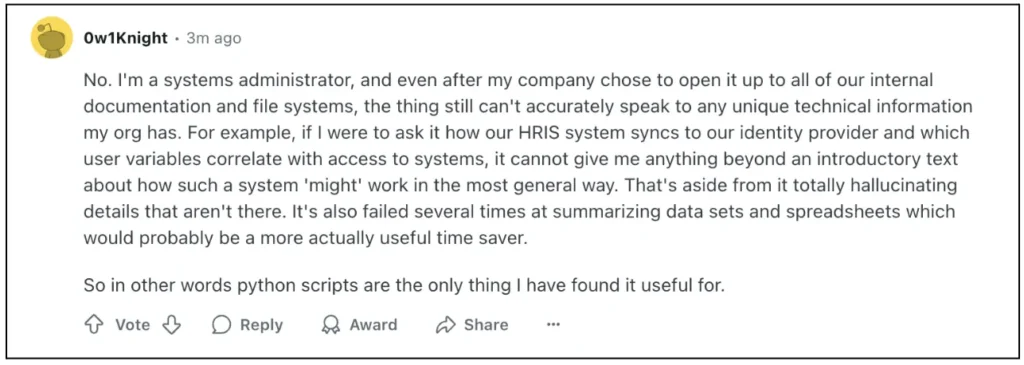

Hallucinations galore

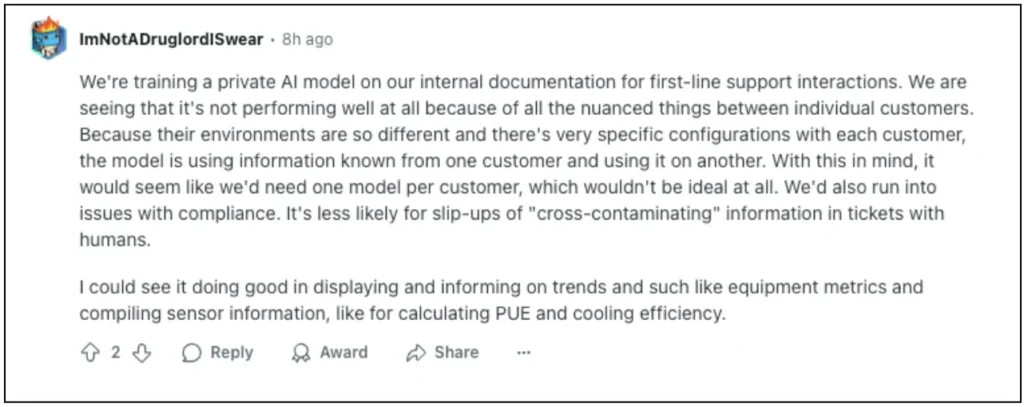

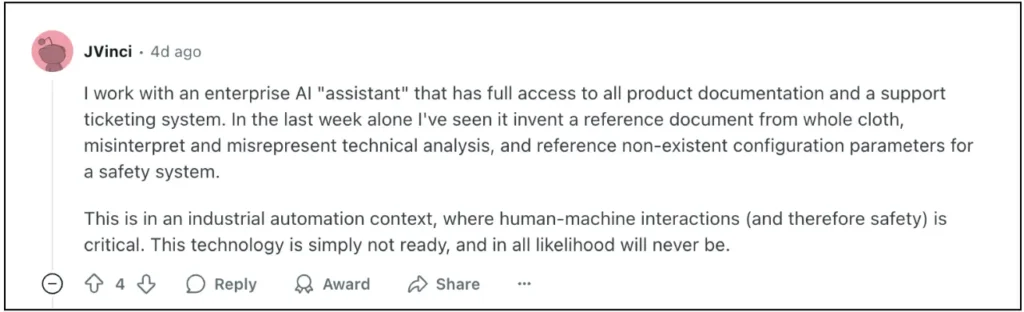

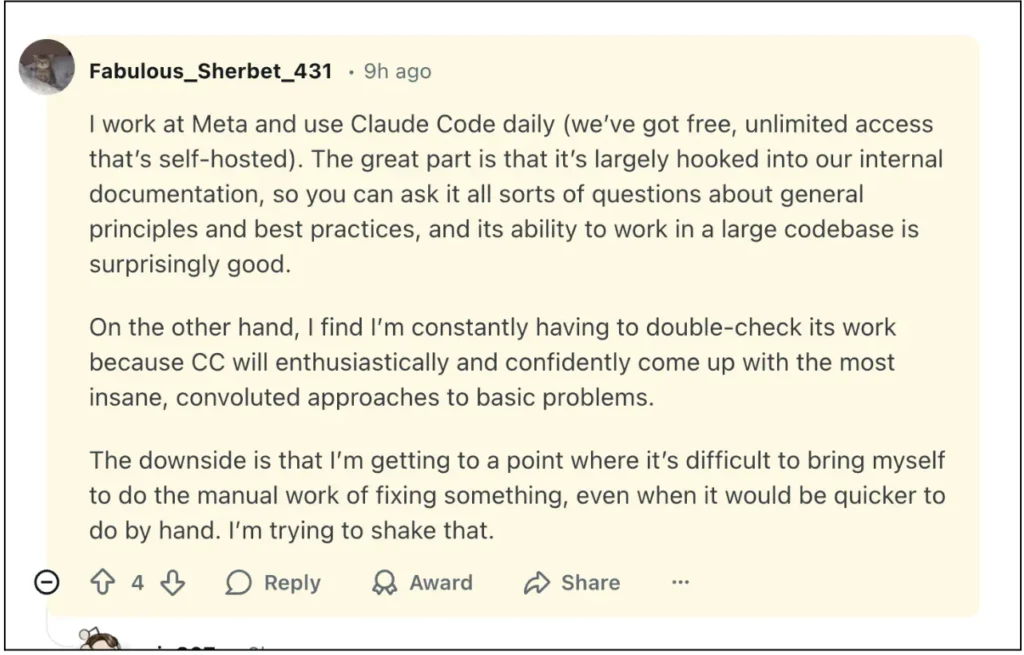

In most out-of-the-box implementations, all AI models hallucinate. Even in custom implementations, they hallucinate more than most organizations are comfortable with.

The data backs this up. Even the best-performing AI models show hallucination rates ranging from 3-16% on factual tasks, according to AIMultiple research. For companies in regulated industries, even a 3% error rate can mean compliance nightmares.

In the representative comment above, we see the challenges of using AI built on internal documentation for first-line support. In my opinion, AI will fare better with generic help-desk needs at, say, a simple SaaS company. Customers asking “How do I upload a file” to an AI chatbot can get a straight answer, since it is assumed that all customers can upload a file in only one manner with no customizations. But as soon as you have more than one environment (say a custom design company’s chatbot being asked “will this particular panel meet the county fire regulations?”), the chances that the AI will hallucinate, even with a very custom implementation, are high. How high? High enough to increase your insurance rates, since a single fire will very likely lead to litigation, regulatory issues and strife with customers.

Sometimes it is just plain wrong. There is no escaping that reality with current AI models unless you customize them very specifically to your needs, which only reduces their error-prone nature but does not completely eliminate it.

In some industries, it may be best to avoid it completely until a next generation of models reaches near-human accuracy, or near-human ethical and moral standards of not lying or making things up.

It defeats the purpose in many cases. When you have to double-check everything it says when it writes articles in internal knowledge bases, are you really unburdening yourself of important tasks at all?

Some say hallucinations happen when the knowledge base lacks information. Even the commenter above agrees AI handles common questions well. If your helpdesk mostly gets those types of questions, AI on your knowledge base makes sense. Just make sure you integrate all the information sources the AI needs to answer accurately.

There are better ways to implement it, as this next commenter explains:

The hallucination challenge still exists but is mitigated by guard rails and feedback loops. Have an expert human review everything the AI tells users. Correct answers quickly and feed that information back to the model. This human-in-the-loop cycle ensures continuous improvement. Train the AI to refuse questions that could cause significant harm if answered incorrectly.
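The guard rails and feedback loop described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor’s implementation: the keyword list, the `ReviewQueue` class, and the `generate` callable (which stands in for whatever model you use) are all hypothetical. It also swaps “training the AI to refuse” for a simple keyword pre-filter, which is cruder but easier to reason about.

```python
from dataclasses import dataclass, field

# Hypothetical topics where a wrong answer could cause real harm.
HIGH_RISK_TERMS = ("fire regulation", "compliance", "legal", "safety")

REFUSAL = "I can't answer that reliably. I'm routing you to a human expert."


@dataclass
class ReviewQueue:
    """Holds AI answers until an expert confirms or corrects them."""
    pending: list = field(default_factory=list)
    corrections: dict = field(default_factory=dict)

    def submit(self, question: str, answer: str) -> None:
        self.pending.append((question, answer))

    def correct(self, question: str, fixed_answer: str) -> None:
        # Stored so the fix can be reused, or fed back to the model later.
        self.corrections[question] = fixed_answer


def answer_with_guardrails(question, generate, queue):
    """Refuse high-risk questions; otherwise answer and queue for review."""
    lowered = question.lower()
    if any(term in lowered for term in HIGH_RISK_TERMS):
        return REFUSAL
    # Prefer an expert-corrected answer when one exists.
    if question in queue.corrections:
        return queue.corrections[question]
    answer = generate(question)
    queue.submit(question, answer)
    return answer


# Usage with a stub in place of a real model.
queue = ReviewQueue()
stub_model = lambda q: "Click Upload in the toolbar."
print(answer_with_guardrails("How do I upload a file?", stub_model, queue))
print(answer_with_guardrails("Will this panel meet the county fire regulations?", stub_model, queue))
```

The point of the design is that refusals happen before the model is ever called, and every answer that does go out leaves a trail an expert can review and correct.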

In any case, train it to be precise and provide accurate and simple answers. Do not let it run wild in your documentation.

SharePoint is never the answer

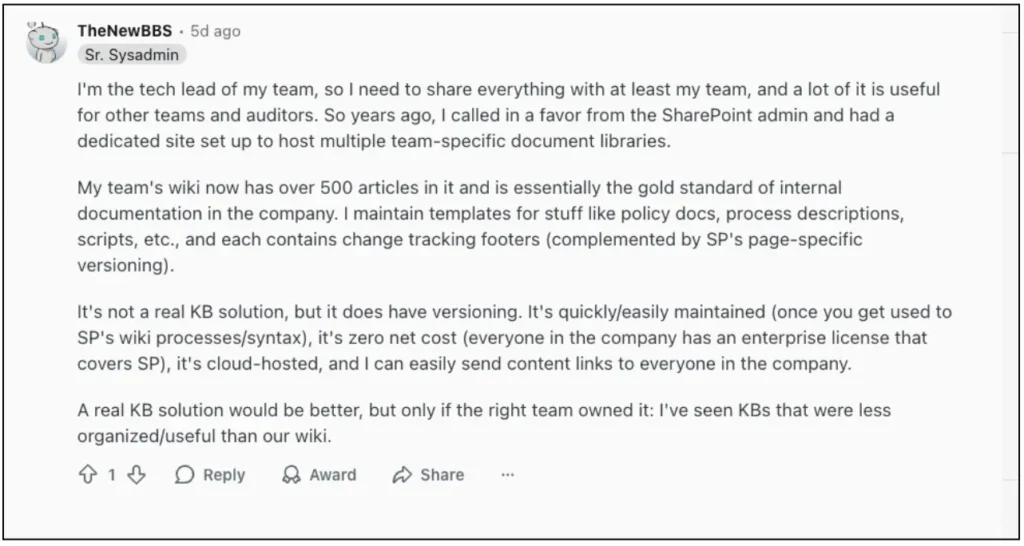

Hundreds of thousands of organizations depend heavily on SharePoint since it’s readily available with most business subscriptions of Office 365. Some (as the next commenter says) use it quite effectively.

In my experience though, it is not the answer unless you have dedicated resources who specialize in SharePoint and work on it full time. The post-millennial workforce expects a knowledge base to be easy to use and very intuitive. Even a Web 2.0-looking app would be far easier to use.

SharePoint is cumbersome and very non-intuitive. As a personal aside, I’ve used SharePoint to organize knowledge. Even Microsoft’s feature-free OneNote beats SharePoint in usability and intuitiveness.

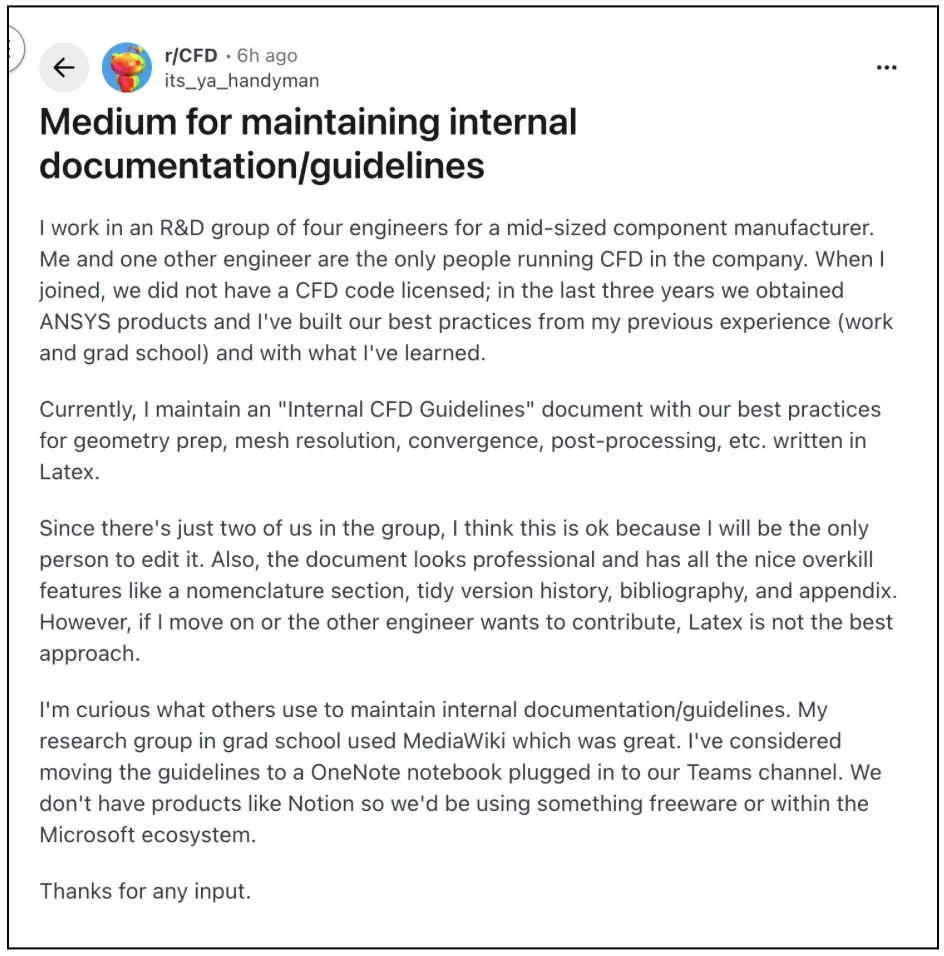

This is another example of a user choosing OneNote because it is available and already part of the licensing package. This might work in a smaller environment, but it will soon become a nightmare once more than a few users start writing how-tos and guides.

Nobody reads your documentation

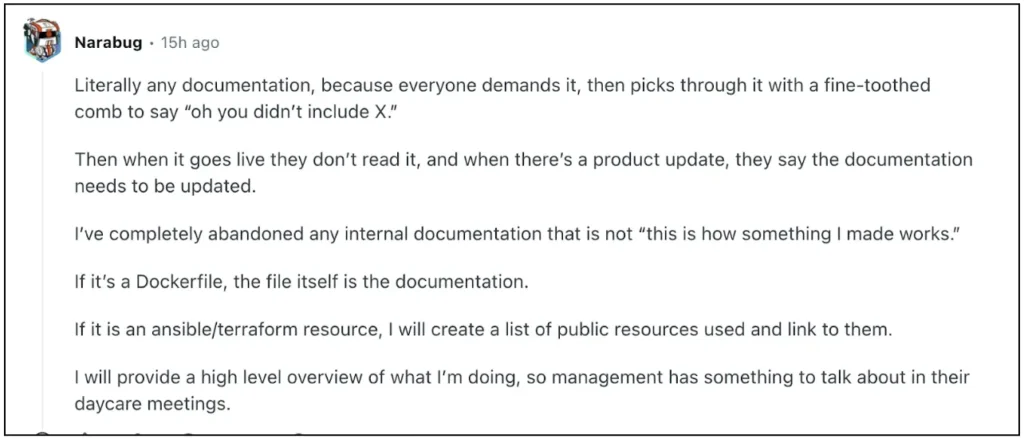

At least internally, once it goes live, nobody reads your documentation. Quite a common complaint with most knowledge bases is that nobody wants ownership of the documentation apart from the poor technical writer. Knowledge bases are an afterthought in most organizations. In many cases, customers reach out to a human agent before reading the documentation, but that is a discussion for another article.

As this user aptly puts it, nobody reads the documentation. Once they are written, engineering leaders do not reread the article on how to set up an environment or the one on how the system architecture works. Yet these two articles have an outsized impact on employee retention and time-to-productivity for a new employee.

Most knowledge bases have no setting to automatically remind document owners about a document’s freshness, and no audit feature showing who wrote the doc and who approved it. All of that information is lost in the email ether, with no chance of ever showing up.
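A freshness reminder does not need to be complicated. Here is a minimal Python sketch of the idea, assuming each document records an owner, an approver, and a last-reviewed date. The `Doc` fields and the 180-day threshold are my own illustrative assumptions, not a description of how any particular knowledge base stores this.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Doc:
    title: str
    owner: str
    approved_by: str
    last_reviewed: date


def stale_docs(docs, today, max_age_days=180):
    """Return docs whose last review is older than max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return [d for d in docs if d.last_reviewed < cutoff]


def reminders(docs, today, max_age_days=180):
    """Build one reminder line per stale doc, addressed to its owner."""
    return [
        f"{d.owner}: please review '{d.title}' "
        f"(last reviewed {d.last_reviewed.isoformat()}, approved by {d.approved_by})"
        for d in stale_docs(docs, today, max_age_days)
    ]


# Usage with made-up documents and names.
docs = [
    Doc("Environment setup", "alice", "bob", date(2024, 1, 15)),
    Doc("System architecture", "carol", "dave", date(2025, 5, 1)),
]
for line in reminders(docs, today=date(2025, 6, 1)):
    print(line)
```

Because the owner and approver live on the document itself, the audit trail stays with the content instead of disappearing into email.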

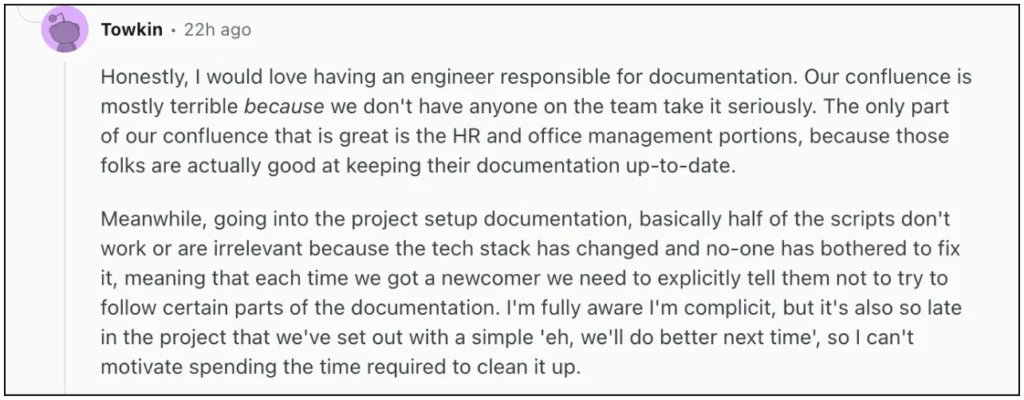

This commenter expounds on this challenge, specifically in project, engineering, and IT teams. Nobody is responsible for the documentation in these departments, since writing documentation is not incentivized and ignoring it is not penalized. This is why ownership structures and maintenance processes matter more than the tool itself.

Building documentation systems that people actually use

This Reddit research is exactly why we built AllyMatter differently. We watched too many companies choose between SharePoint’s complexity and Google Docs’ chaos. We saw teams implement AI before fixing their foundational documentation problems, then wonder why the AI hallucinated.

AllyMatter focuses on the unglamorous fundamentals: clear ownership through approval workflows, automatic freshness reminders so docs don’t rot, and audit trails that show who wrote what and when. These aren’t sexy features, but they solve the human problems that make or break documentation systems.

The AI stuff? It works better when your documentation is actually organized, maintained, and trustworthy. No amount of artificial intelligence can fix documentation that nobody owns or updates.

What actually matters

The path forward isn’t choosing between traditional documentation and AI-powered tools. It’s recognizing that sustainable documentation requires clear ownership, realistic maintenance expectations, and content quality that makes any retrieval method—human search or AI-assisted—actually useful.

Technology can reduce friction, surface relevant information faster, and automate routine queries. But the human problems of incentives, ownership, and organizational will remain. Companies that solve those problems first will get far more value from whatever tools they deploy.

The Reddit comments make it clear: the best AI can’t fix broken documentation practices. But good practices create a foundation where AI can actually deliver on its promises.